Tag-driven reports enable triage prioritization. Learn how to enable the Reporting Test Driven Development (RTDD) workflow.

Introduction

The mobile market is quite mature. Most organizations are deeply invested in digital technologies that focus on Mobile, Web, IoT, and others. With this investment comes an increased risk of losing business due to poor quality, defects that leak into production, and late releases.

When it comes to assuring continuous digital quality, many enterprises struggle with quality visibility, test planning and optimization, test flakiness, and false test results (false positives and negatives). Learn about some of these challenges, their root causes, and an approach to digital quality insight that works.

Digital quality challenges

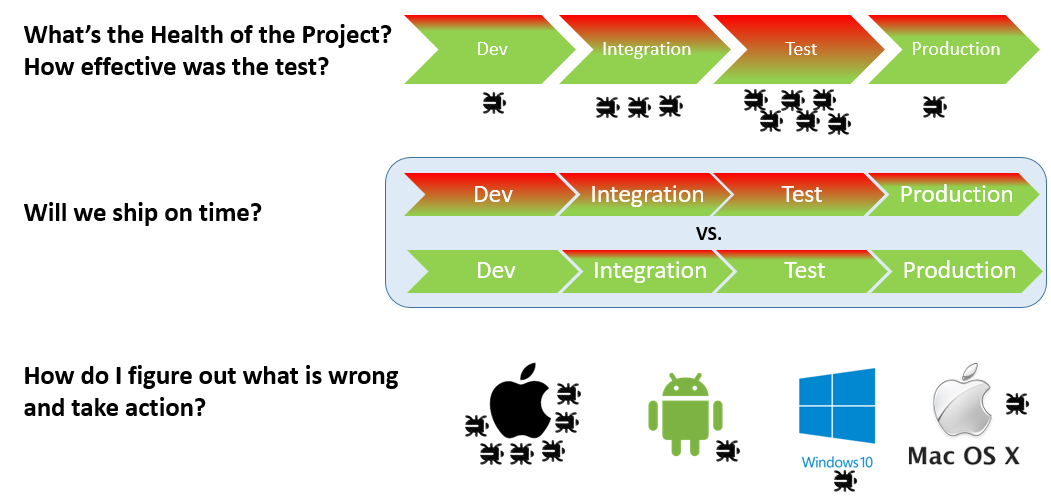

When Perfecto engages with customers in market segments like finance, retail, insurance, and more, customers typically raise the following issues:

- Organizations find it hard to triage failures post executions (regardless of whether CI is involved or not).

- Planning, management, and optimization of test cycles based on proper insights is a significant challenge.

- Inconsistent test results and test flakiness are usually the root cause of project delays, product area blind spots, and coverage concerns in respective functional areas.

- Execution-based reports are too long to validate and examine. The ability to break long reports into smaller blocks is a necessary ingredient in faster triaging.

- An on-demand management view of the entire product quality, from top to bottom, is a hard-to-achieve goal, especially around large test suites.

- It is not possible to break long test executions into smaller test reports as a way to achieve faster quality analysis of issues.

The image at the start of this section illustrates common challenges with digital quality.

Test analysis with Perfecto Smart Reporting

Perfecto Smart Reporting lets you optimize quality visibility for better test analysis. It empowers you to build structured test logic that can be used for test reports. In addition, Smart Reporting leverages tags built into the tests from the initial authoring stage as a driver for future test planning, defect triaging, scaling continuous integration (CI) testing activities, and more. You can use the Reporting SDK and methodology within your framework and development language of choice: the SDK supports authoring tests in Java, JavaScript, C#, Python, Ruby, and HPE UFT (Legacy), with support for IDEs like Android Studio, IntelliJ, Eclipse, and Xcode.

Embark on a deep-dive into the available tags and how you can best use them to achieve the following desired outcomes:

- Less flaky tests and stable execution

- On-demand quality visibility

- Test planning, management, and optimization

- Data-driven decision making

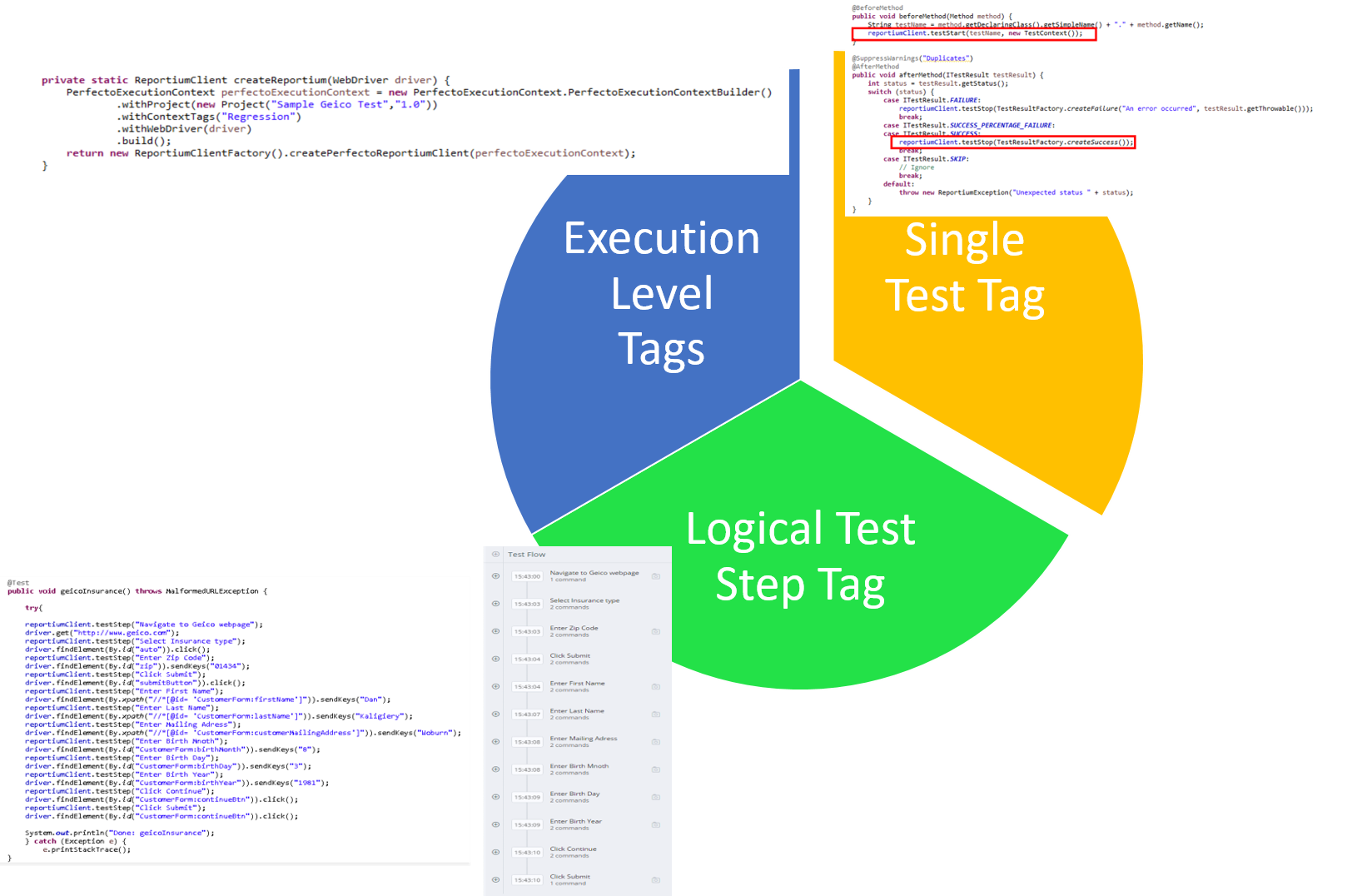

Suggested tagging for advanced digital quality visibility

When you start to build test execution suites, it is important to begin by preconfiguring custom tags that are meaningful and valuable to your teams. The tags should be classified into the following buckets:

- Execution level tags

- Single Test Report tags

- Logical step names

The following table shows suggested custom tags with classification analysis.

| Execution Level Tags: Categories | Execution Level Tags: Examples | Single Test Report Tags: Categories | Single Test Report Tags: Examples | Logical Test Steps: Categories | Logical Test Steps: Examples |
|---|---|---|---|---|---|
| Test type (Regression, Unit, Nightly, Smoke) | “Regression”, “Unit”, “Nightly”, “Smoke” | Test Scenario Identifiers | “Banking Check Deposit”, “Geico Login” | Functional Areas | “Login”, “Search” |
| CI | Build number, Job, Branch | Environmental Identifiers | “Testing 2G conditions”, “Testing on iOS Devices”, “Testing Device Orientation”, “Testing Location Changes”, “Persona” | Functional Actions | “Launch App”, “Press Back”, “Click on Menu”, “Navigate to page” |
| CI Server Names | “Alexander” | | | | |
| Team Names | “iOS Team”, “Android Team”, “UI Team” | | | | |
| Platforms | “iOS”, “Android”, “Chrome”, “IOT” | | | | |
| Release/Sprint Versions | “9.x” | | | | |
| Test Frameworks Associations | “Appium”, “Espresso”, “Selenium”, “UFT” | | | | |
| Test Code Languages | “Java”, “C#”, “Python” | | | | |

Based on this table, it makes sense for teams to define regression tags that cover only the relevant tests for each functional area, as recommended in the Logical Test Steps columns above, while each test step has a name that indicates what the test is doing. When implemented correctly, at the end of each execution, management and other relevant personas can easily correlate between the high-level suite, the middle-layer tested area, and, finally, the single test failure. Such governance and management of the entire suite supports better planning, triaging, and decision making.

With the use of proper tags, you can realize an additional benefit when implementing advanced CI. Perfecto’s Reporting SDK supports Jenkins and Groovy APIs for easy communication with the reporting suite. Filtering your test report by job number, build number, or release version becomes simpler and provides proper insights on demand.
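As a hedged illustration of the CI tagging idea above, the following sketch derives execution-level tags from environment variables. `JOB_NAME`, `BUILD_NUMBER`, and `BRANCH_NAME` are variables that Jenkins typically exposes to a build (`BRANCH_NAME` mainly in multibranch jobs). The `CiTags` class and the `job-`, `build-`, and `branch-` prefixes are assumptions made for this example and are not part of the Reporting SDK.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

/** Hypothetical helper, not part of the Reporting SDK. */
final class CiTags {

    /**
     * Builds execution-level tags from CI environment variables.
     * The prefixes are an assumed naming scheme for this sketch.
     */
    static List<String> fromEnvironment(Map<String, String> env) {
        List<String> tags = new ArrayList<>();
        addIfPresent(tags, "job-", env.get("JOB_NAME"));
        addIfPresent(tags, "build-", env.get("BUILD_NUMBER"));
        addIfPresent(tags, "branch-", env.get("BRANCH_NAME"));
        return tags;
    }

    /** Skips variables that are missing or blank, for example outside CI. */
    private static void addIfPresent(List<String> tags, String prefix, String value) {
        if (value != null && !value.trim().isEmpty()) {
            tags.add(prefix + value.trim());
        }
    }

    public static void main(String[] args) {
        // Outside a CI build the list is typically empty.
        System.out.println(fromEnvironment(System.getenv()));
    }
}
```

The resulting list could then be supplied as context tags when the execution context is built, so that reports can later be filtered by job, build, or branch.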

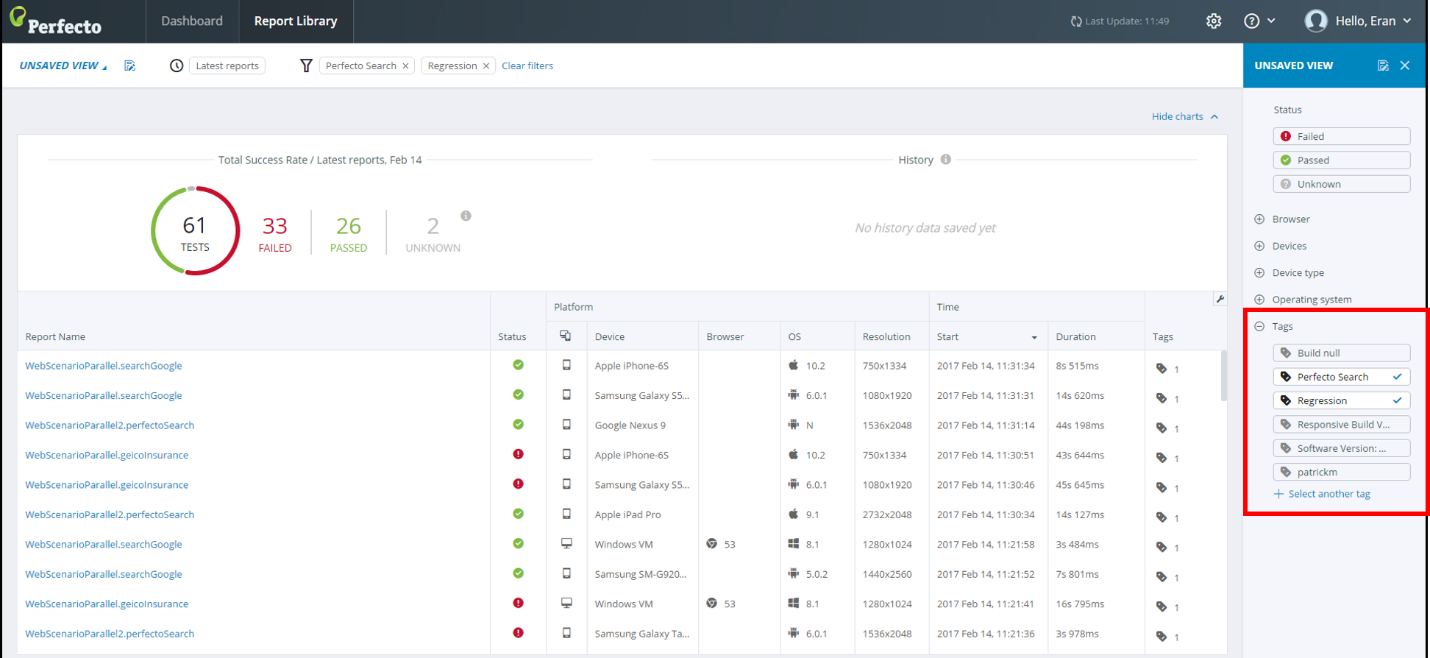

The preceding image shows a combination of 3 Smart Reporting tags.

1 | Get started with Smart Reporting and basic tagging

To start working with this technology, download the Reporting SDK and integrate it into your IDE of choice following the instructions provided in the Reporting SDK article.

You can select one of the following methods to download and set up Smart Reporting:

- Download the Reporting SDK directly (as a .jar file for Java, Ruby Gem, and so on), as described in Reporting SDK

- Set the required dependency management tool (Maven, Gradle, Ivy) to download it for you

The uniqueness of the Reporting SDK is its ability to “break” a long execution into small building blocks and differentiate between methods or smaller test pieces.

The result is an execution broken into digestible amounts of content instead of endless reports with hundreds or even thousands of commands.
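To make the "building blocks" idea concrete, here is a minimal sketch of how a flat stream of commands can be filed under named logical steps. `StepRecorder` is a stand-in written for this example only; it mimics the concept and is not the Reporting SDK or its API.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Stand-in recorder that mimics the idea of logical steps; it is NOT the Reporting SDK. */
final class StepRecorder {
    private final Map<String, List<String>> commandsByStep = new LinkedHashMap<>();
    private String currentStep = "(no step)";

    /** Starts a new logical block; later commands are filed under it. */
    void step(String name) {
        currentStep = name;
        commandsByStep.putIfAbsent(name, new ArrayList<>());
    }

    /** Records one low-level command under the current logical step. */
    void command(String description) {
        commandsByStep.computeIfAbsent(currentStep, k -> new ArrayList<>()).add(description);
    }

    /** Returns the commands grouped by step, in the order the steps were started. */
    Map<String, List<String>> blocks() {
        return commandsByStep;
    }

    public static void main(String[] args) {
        StepRecorder recorder = new StepRecorder();
        recorder.step("Login to application");
        recorder.command("find element #username");
        recorder.command("send keys");
        recorder.step("Open a premium account");
        recorder.command("find element #premium-account");
        recorder.blocks().forEach((step, commands) ->
                System.out.println(step + ": " + commands.size() + " command(s)"));
    }
}
```

A reader of such a report can jump to the step that failed instead of scanning every command in the execution.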

Create an instance of the reporting client

Use the following code to instantiate the Reporting client in the test code:

@BeforeClass(alwaysRun = true)
public void baseBeforeClass() throws MalformedURLException {
    // Create the driver first; the reporting client is created from it
    driver = createDriver();
    reportiumClient = createReportiumClient(driver);
}

When you instantiate the reporting client in your test code through a simple call, as shown in the preceding code sample, the Reporting SDK lets you wrap each test with basic commands. For each method annotated with @Test that identifies a test scenario, you can use custom tags like "Regression", "Unit", or functional-area specific tags (by using the PerfectoExecutionContext class) and the following methods:

- testStart()

- testStep()

- testStop()

The following code sample shows a test that uses these methods.

@Test
public void myTest() {
    // Open a new test report, tagged "Sanity"
    reportiumClient.testStart("myTest", new TestContext("Sanity"));
    try {
        reportiumClient.testStep("Login to application");
        WebElement username = driver.findElement(By.id("username"));
        username.sendKeys("myUser");
        driver.findElement(By.name("submit")).click();

        reportiumClient.testStep("Open a premium account");
        WebElement premiumAccount = driver.findElement(By.id("premium-account"));
        assertEquals(premiumAccount.getText(), "PREMIUM");
        premiumAccount.click();

        reportiumClient.testStep("Transfer funds");
        ...

        // Stopping the test - success
        reportiumClient.testStop(TestResultFactory.createSuccess());
    } catch (Throwable t) {
        // Stopping the test - failure
        reportiumClient.testStop(TestResultFactory.createFailure(t.getMessage(), t));
    }
}

Creating functional tests that do not utilize tags as a method of pre/post-test execution analysis and triaging usually results in lengthy and inefficient processes. Marking a set of tests with a context tag through withContextTags() makes it much easier, during test debugging and test execution, to filter the tests relevant to that tag (the example that follows uses a “Regression” tag).

In the same way, you can gather tests under a testing type context named "Smoke", "UI", "CI", or similar, and also mark tests that cover a specific functional area such as Login, Search, and more. These tags help manage the test execution flows and their results, and they surface insights and trends across builds, CI jobs, and other milestones.

Creating context tags is a key practice toward fast quality analysis and test planning.

The following code sample includes generic tags on the Driver (entire execution) level. They are added automatically to each test running as part of this execution.

PerfectoExecutionContext perfectoExecutionContext = new PerfectoExecutionContext.PerfectoExecutionContextBuilder()
        .withProject(new Project("Sample Reportium project", "1.0"))
        .withJob(new Job("IOS tests", 45))
        .withContextTags("Regression")
        .withWebDriver(driver)
        .build();
ReportiumClient reportiumClient = new ReportiumClientFactory().createPerfectoReportiumClient(perfectoExecutionContext);

To add tags to a single test, use the TestContext class and create the instance when starting the specific test. These tags apply at the single test (method/function) level and are automatically added to this test only.

Compared to the entire execution-level tags demonstrated in the previous code sample, the use of tags within a single test scenario would look as follows:

@Test
public void myTest() {
    reportiumClient.testStart("myTest", new TestContext("Log-in Use Case", "iOSNativeAppLogin", "iOS Team"));
    // ... test steps and testStop() follow, as in the earlier sample
}

To get the report URL after execution and drill down, implement the following code:

String reportURL = reportiumClient.getReportUrl();
System.out.println("Report URL - " + reportURL);

When using tags within a single test, as shown above, you gain the flexibility to run and distinguish the same test in various contexts and under different conditions.
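The relationship between the two tag levels can be sketched as a simple union: execution-level tags apply to every test in the execution, and test-level tags apply to one test only. The `EffectiveTags` class below is a hypothetical model written for this example. It illustrates the behavior described above and makes no claim about how the reporting backend stores tags.

```java
import java.util.Arrays;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

/** Hypothetical model of tag inheritance; not part of the Reporting SDK. */
final class EffectiveTags {

    /** Combines execution-level tags with the tags of one test, dropping duplicates. */
    static Set<String> forTest(List<String> executionTags, List<String> testTags) {
        Set<String> tags = new LinkedHashSet<>(executionTags);
        tags.addAll(testTags);
        return tags;
    }

    public static void main(String[] args) {
        List<String> executionTags = Arrays.asList("Regression");
        List<String> loginTags = Arrays.asList("Log-in Use Case", "iOSNativeAppLogin", "iOS Team");
        // The login test can be found through any of these four tags.
        System.out.println(forTest(executionTags, loginTags));
    }
}
```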

If you use the TestNG framework, we strongly recommend working with the TestNG listener so that all report statuses are reported and aggregated automatically, in the following way:

@Override
public void onTestStart(ITestResult testResult) {
    // Report only when the test runs against a Perfecto cloud
    if (getBundle().getString("remote.server", "").contains("perfecto")) {
        createReportiumclient(testResult).testStart(testResult.getMethod().getMethodName(),
                new TestContext(testResult.getMethod().getGroups()));
    }
}

When leveraging TestNG, you need to implement the ITestListener interface.

All test status results are reported through the following method:

@Override
public void onTestSuccess(ITestResult testResult) {
    ReportiumClient client = getReportiumClient();
    if (null != client) {
        client.testStop(TestResultFactory.createSuccess());
        logTestEnd(testResult);
    }
}

@Override
public void onTestFailure(ITestResult testResult) {
    ReportiumClient client = getReportiumClient();
    if (null != client) {
        client.testStop(TestResultFactory.createFailure("An error occurred",
                testResult.getThrowable()));
        logTestEnd(testResult);
    }
}

2 | Implement tags across functional application areas/test types

Now that we are clear on the environment setup to use Smart Reporting, let’s understand how to structure a winning test suite that leverages tags and supports better planning and insights.

Perfecto created a getting started project example (found in the Perfecto GIT Repository) that uses a set of RemoteWebDriver automated tests on Geico’s responsive web site running via TestNG on 3 platforms (Windows, Android, and iOS).

If you look at the example, you can see that adding a simple method with a tag called Regression, as seen in the following code sample, can help you start building better tests and triaging failures.

private static ReportiumClient createReportium(WebDriver driver) {
    PerfectoExecutionContext perfectoExecutionContext = new PerfectoExecutionContext.PerfectoExecutionContextBuilder()
            .withProject(new Project("Sample Geico Test", "1.0"))
            .withContextTags("Regression")
            .withWebDriver(driver)
            .build();
    return new ReportiumClientFactory().createPerfectoReportiumClient(perfectoExecutionContext);
}

When the Regression tag above is included in the test class, the test cases that run with this client are grouped under that tag.

One of the common use cases for using tags is the need to generate the same context for a group of test cases that are not executed from within CI and are used for other quality purposes. Another good example is setting the release version or sprint number so that it can later be used for comparison and trending illustration.

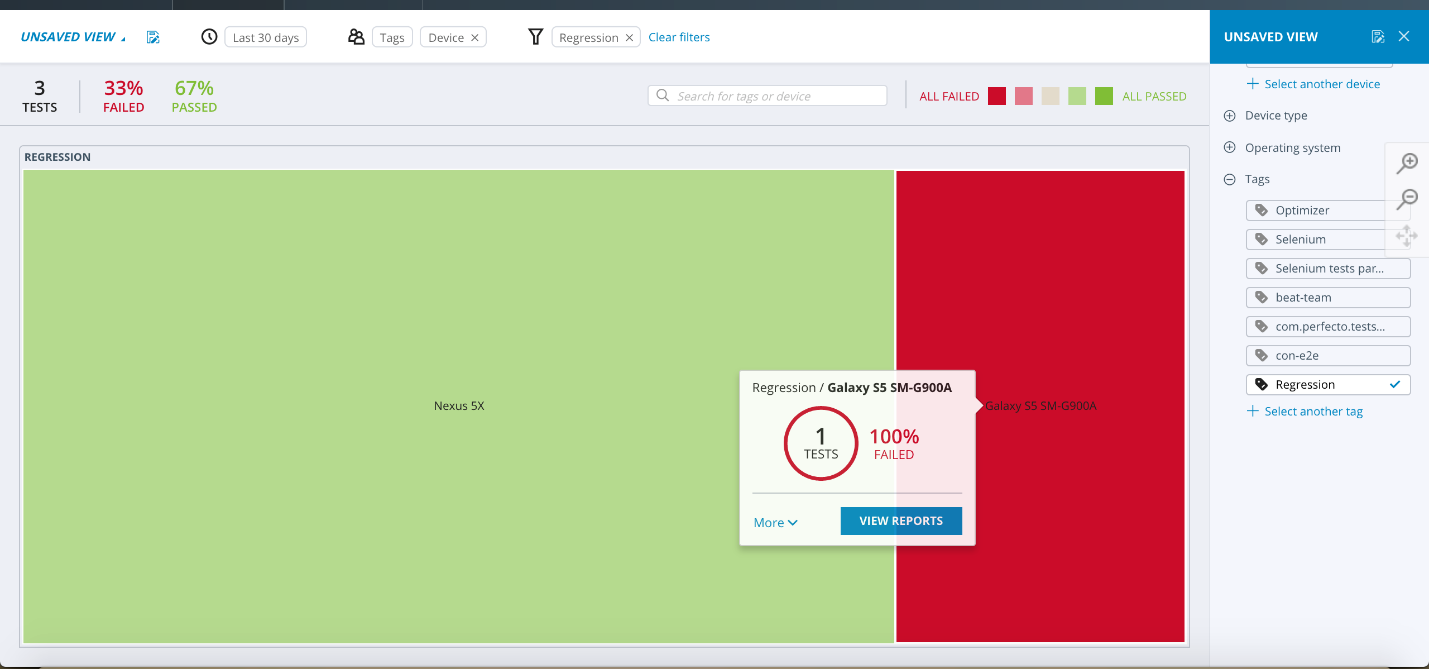

Drill down to a failure root cause analysis using tags

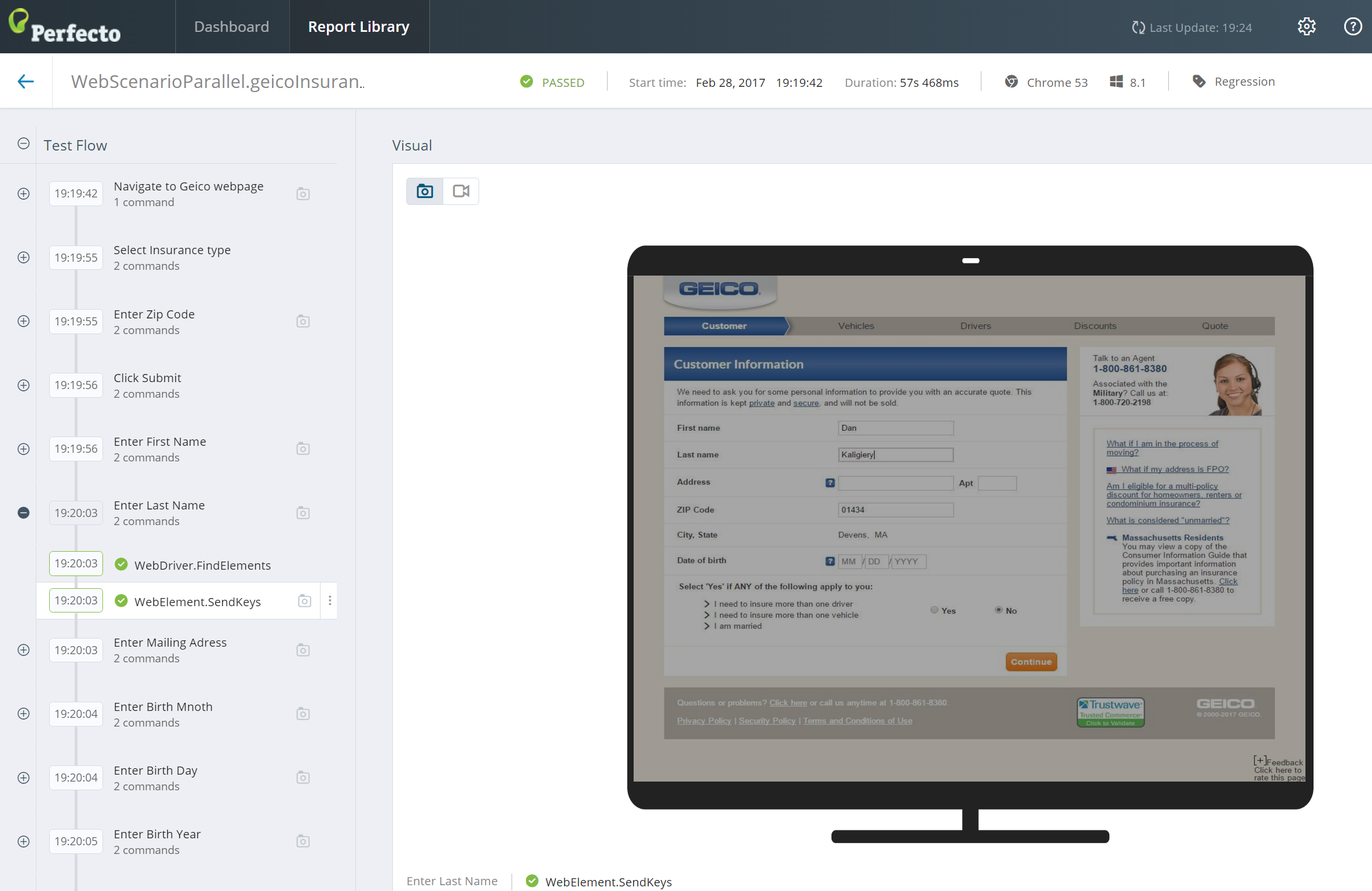

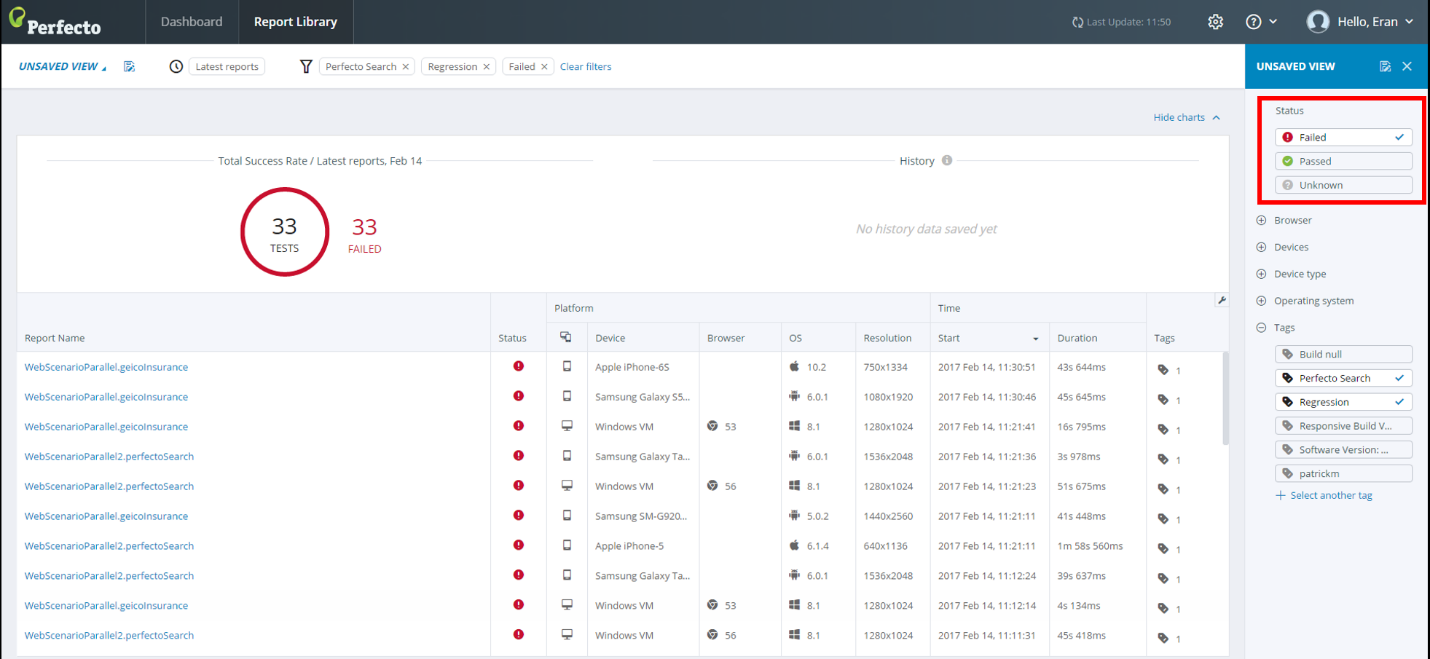

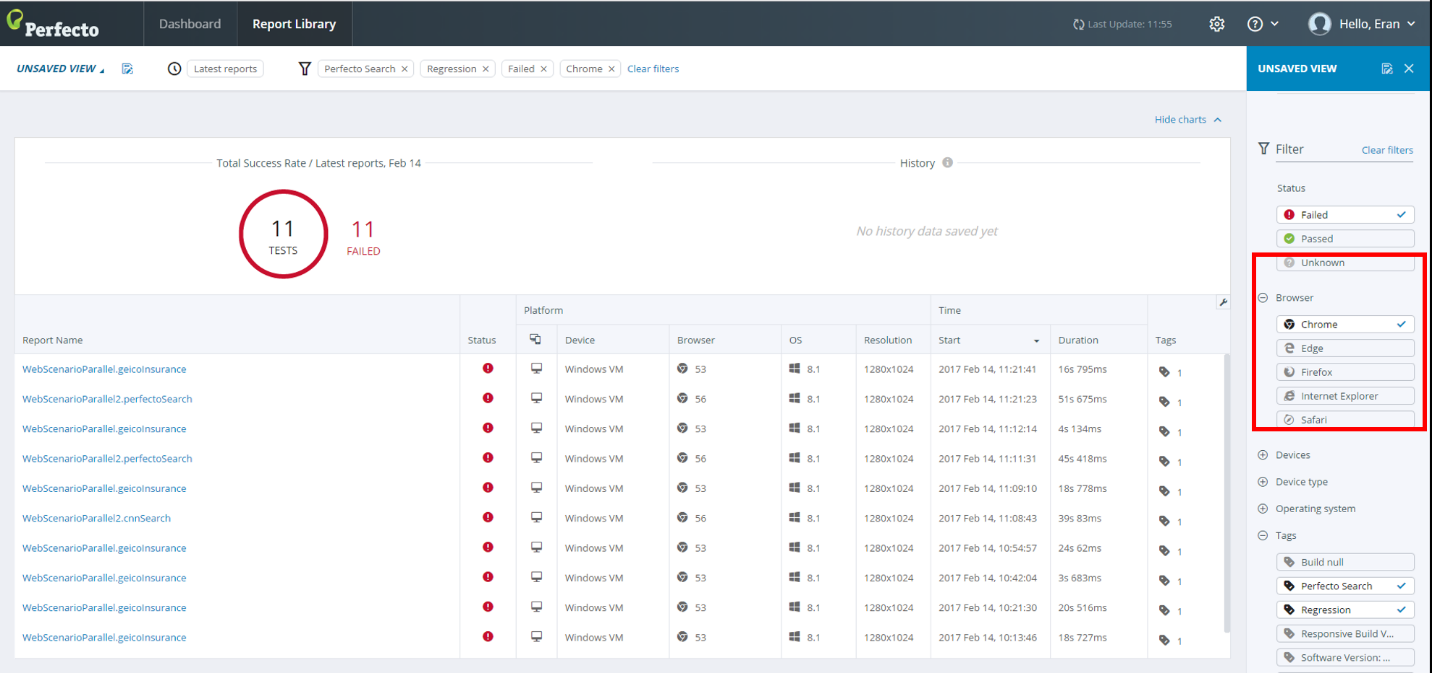

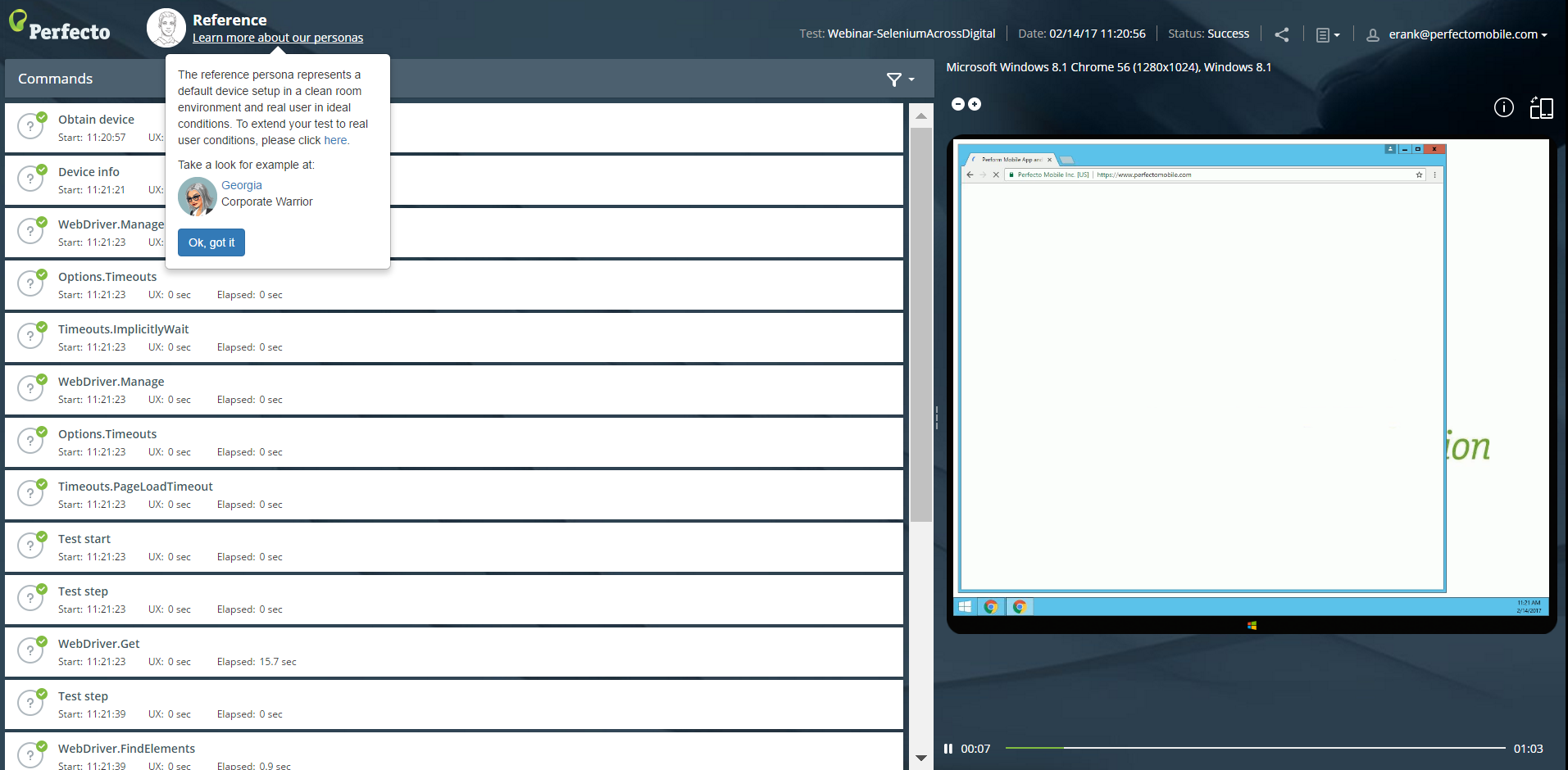

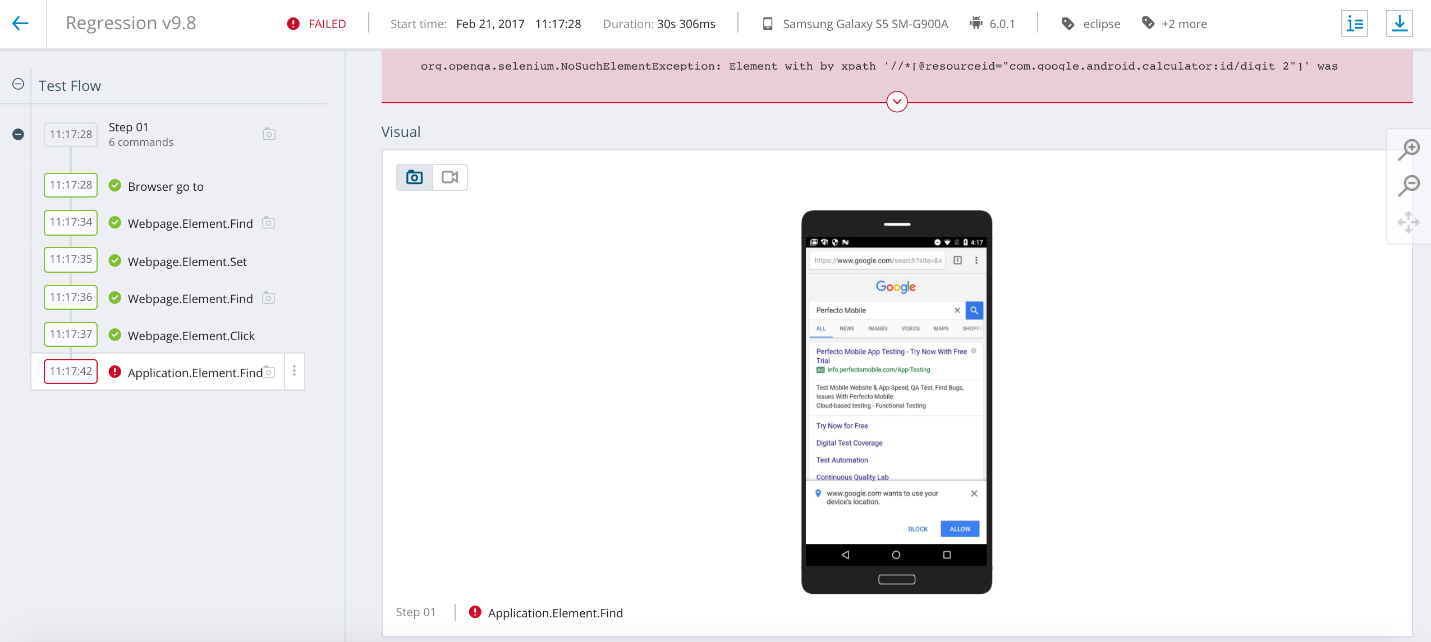

In the following example, we use the predefined Regression tag as part of our triaging to isolate the real issue. The following images demonstrate the entire process up to the test code itself.

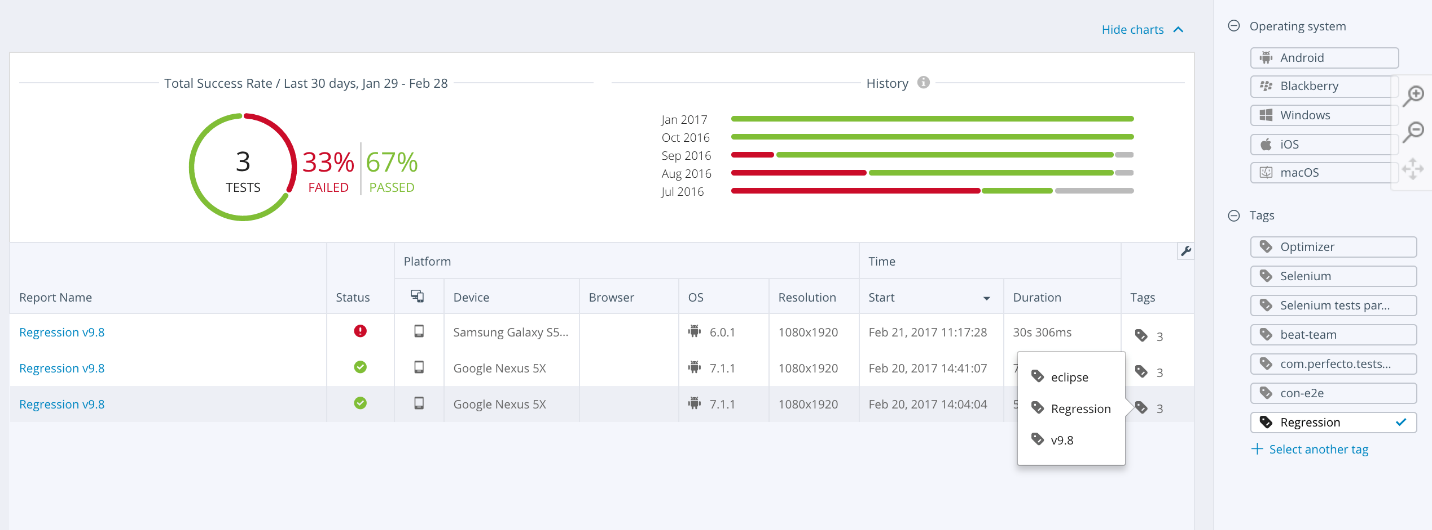

The above dashboard view enables filtering the entire suite to display only the results relevant to the Regression test scenarios. Moving the pointer over the failures bucket allows drilling down to the actual report library (grid) as shown in the following figure.

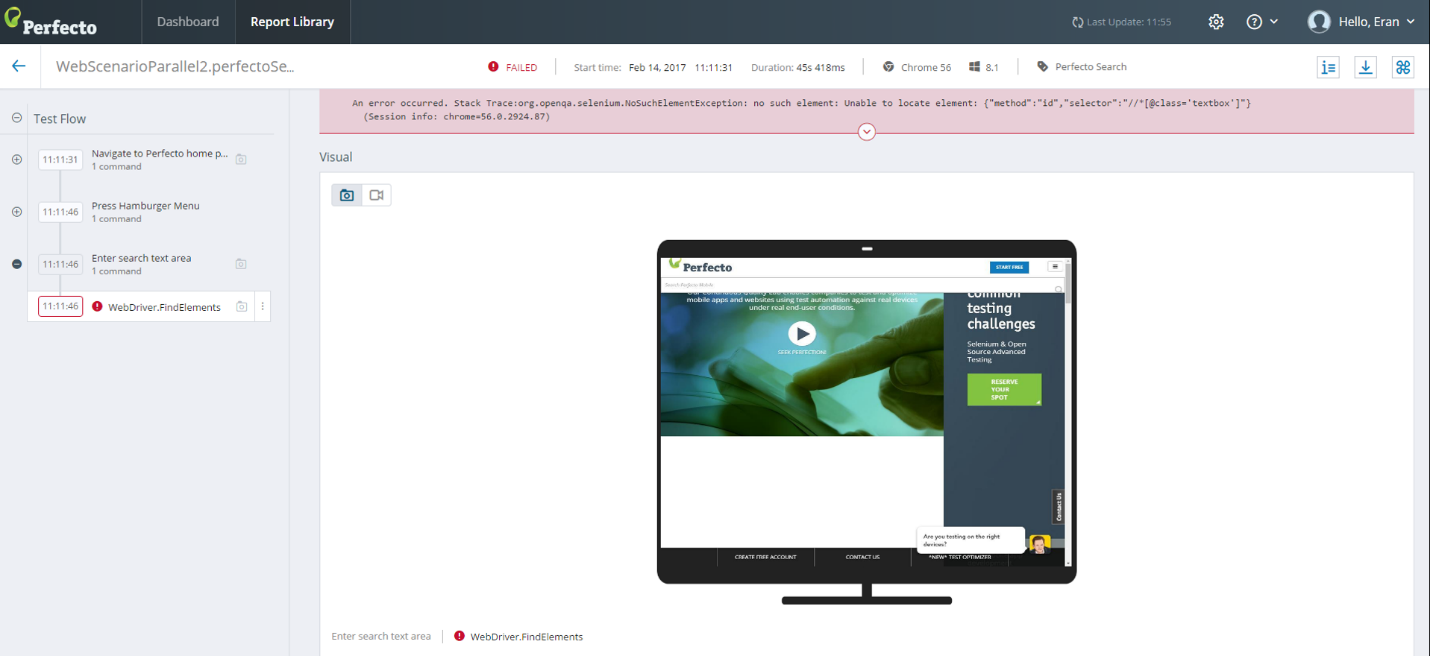

In the above grid view, you can access a single test report and filter through the execution steps as needed. If we examine the following figure, we can see a correlation between the test flow steps and the code in the following code sample.

// Test method: navigate to Geico and get an insurance quote
@Test
public void geicoInsurance() throws MalformedURLException {
    reportiumClient.testStep("Navigate to Geico webpage");
    driver.get("http://www.geico.com");
    // ... remaining steps of the scenario
}

When using such tags in the report, the ability to group these tests after execution by tags and by a secondary filter, such as target platform (Web or Mobile), adds another layer of insight.

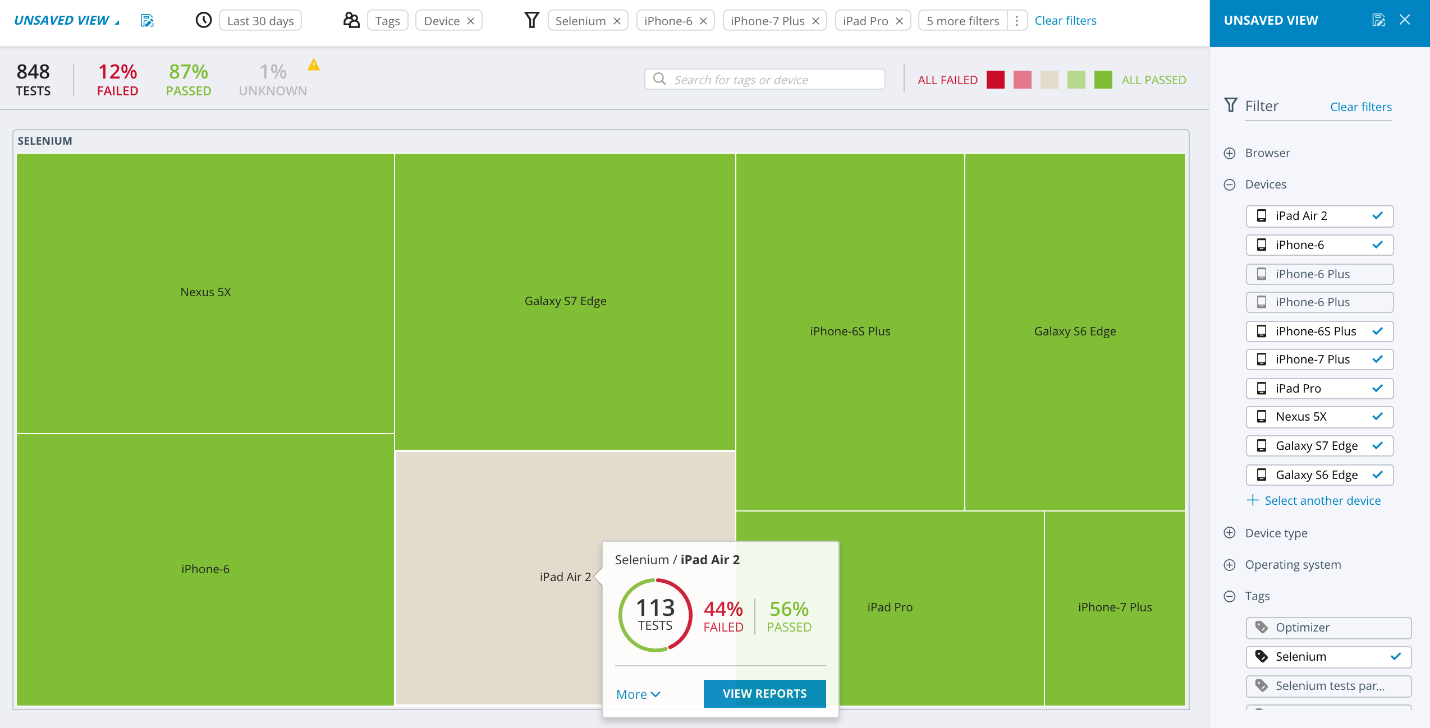

Group tests by tags and more

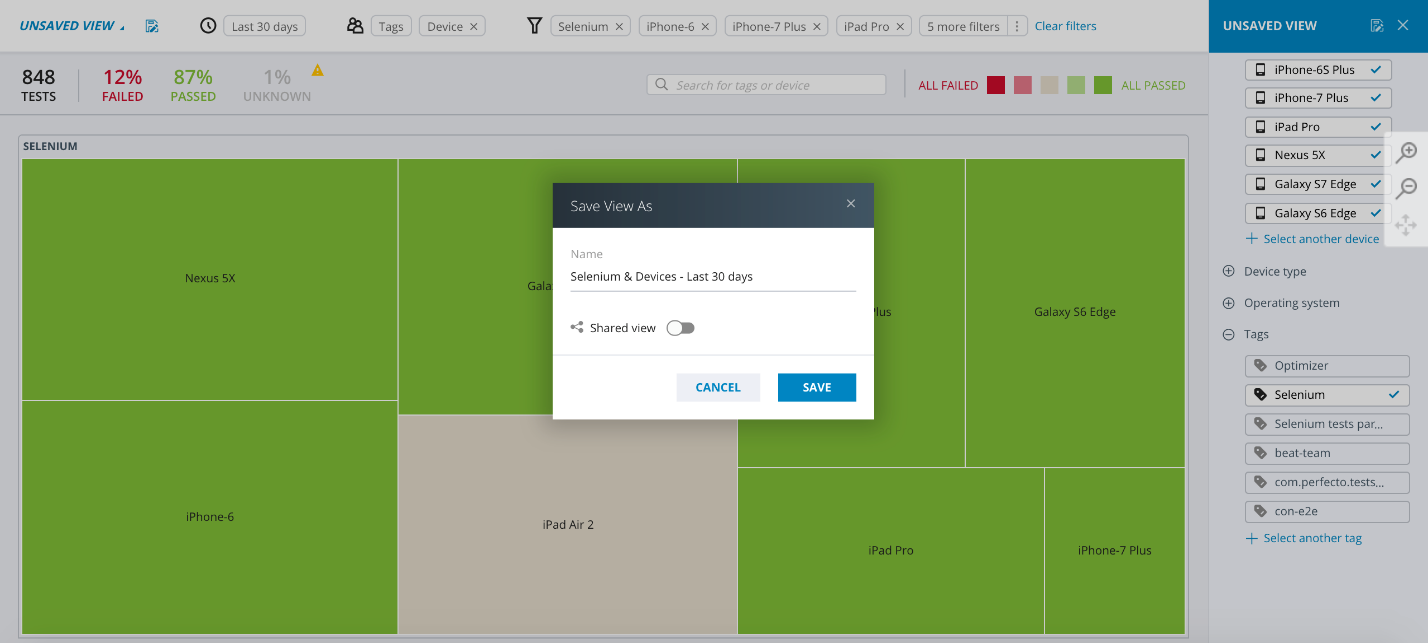

The custom view shown in the following figure has two levels of group-by: Tags and Devices. As you can see, we included the Selenium tag (you can include or exclude tags via the filter as needed) and created a filter for specific devices of interest. The result is a custom view that combines the Selenium tag with the relevant devices that we want to examine.

Assuming the view created in the above figure fits the organization and its various personas and offers the right quality visibility, Smart Reporting supports saving this custom view as either a private or a shared view for future use (as shown in the following figure).

Consider building a triaging process that takes into consideration multiple custom views that support the quality goals of the project. In addition, the custom view creation phase is the right step in the triaging process to identify any existing gaps in your tags and in your reporting test-driven development implementation.
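The two-level group-by with a device filter can be approximated in plain Java as follows. This is only a sketch of the concept: the `Report` type, its fields, and the device names are hypothetical and do not come from the Smart Reporting UI or API.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;

/** Hypothetical model of a custom view; not part of Smart Reporting. */
final class CustomView {

    /** One report row; the field names are assumptions for this sketch. */
    static final class Report {
        final String name;
        final String tag;
        final String device;

        Report(String name, String tag, String device) {
            this.name = name;
            this.tag = tag;
            this.device = device;
        }
    }

    /** Keeps only the devices of interest, then groups by tag and, within each tag, by device. */
    static Map<String, Map<String, List<String>>> groupByTagThenDevice(
            List<Report> reports, Set<String> devicesOfInterest) {
        Map<String, Map<String, List<String>>> view = new TreeMap<>();
        for (Report r : reports) {
            if (!devicesOfInterest.contains(r.device)) {
                continue; // filtered out of the view
            }
            view.computeIfAbsent(r.tag, k -> new TreeMap<>())
                .computeIfAbsent(r.device, k -> new ArrayList<>())
                .add(r.name);
        }
        return view;
    }

    public static void main(String[] args) {
        List<Report> reports = Arrays.asList(
                new Report("geicoInsurance", "Selenium", "iPhone"),
                new Report("perfectoSearch", "Selenium", "Galaxy"),
                new Report("geicoInsurance", "Selenium", "Chrome desktop"));
        Set<String> devices = new HashSet<>(Arrays.asList("iPhone", "Galaxy"));
        System.out.println(groupByTagThenDevice(reports, devices));
    }
}
```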

3 | Implement multiple tags across several applications

Now that you understand how to set up Smart Reporting and work with the supported SDK methods (testStep, testStart, testStop) and tags, we can scale the method to multiple applications and various test scenarios and use cases.

As a first step, let’s create a new test class and include a new tag name.

In this specific case, we created a simple search test within the Perfecto responsive web site. The test opens the Perfecto website, navigates to the search text box, and performs a search on the words “Digital Index”. This test is added to the existing testng.xml file used to execute the above Geico example. The following code sample shows an implementation of this scenario with a newly added tag named “Perfecto Search”.

private static ReportiumClient createReportium(WebDriver driver) {

PerfectoExecutionContext perfectoExecutionContext = new PerfectoExecutionContext.PerfectoExecutionContextBuilder()

.withProject(new Project("Sample Perfecto Test", "1.0"))

.withContextTags("Perfecto Search")

.withWebDriver(driver)

.build();

return new ReportiumClientFactory().createPerfectoReportiumClient(perfectoExecutionContext);

}

// Test Method, navigate to Perfecto Web Site

@Test

public void perfectoSearch() throws MalformedURLException {

reportiumClient.testStep("Navigate to Perfecto Home Page");

driver.get("http://www.perfectomobile.com");

reportiumClient.testStep("Press Start Free button");

driver.findElement(By.xpath("//*[text()='Start Free']")).click();

reportiumClient.testStep("Enter my first name");

driver.findElement(By.id("First Name")).sendKeys("Eran");

reportiumClient.testStep("Enter my last name");

driver.findElement(By.id("Last Name")).sendKeys("Kinsbruner");

reportiumClient.testStep("Enter my email adr");

driver.findElement(By.id("Email")).sendKeys("erank@perfectomobile.com");

reportiumClient.testStep("Enter my phone number");

driver.findElement(By.id("Phone")).sendKeys("+7816652345");

reportiumClient.testStep("Enter my company name");

driver.findElement(By.id("Company")).sendKeys("Eran");

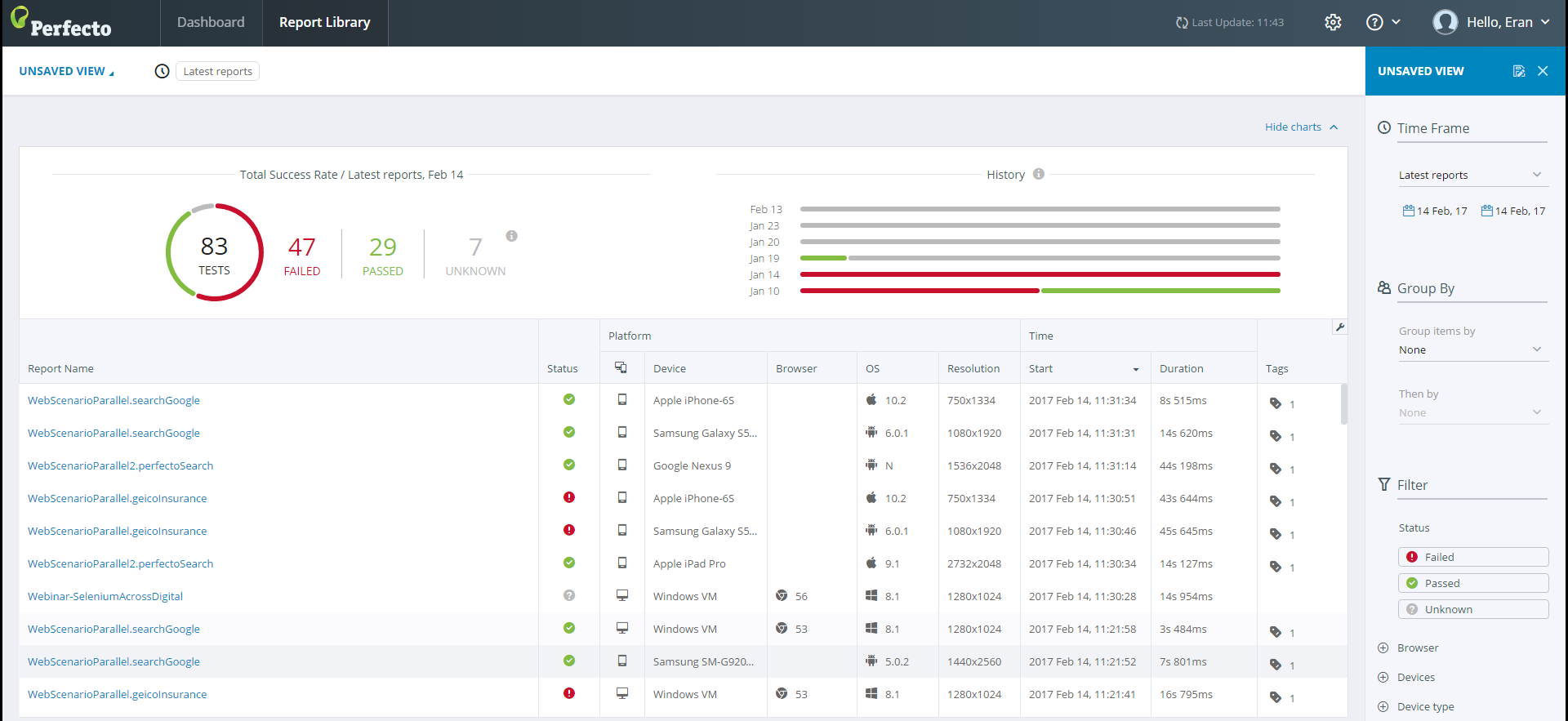

System.out.println("Done: Perfecto Search");When we scale our test suite, we can examine a "non-tag based" report and a "tagged" one. The following figure shows a full test execution of both the Regression and the Perfecto Search test scenarios along with a long list of reports that is hard to navigate and analyze.

When you want to drill down only to the two reports that we tagged above, it is easy to get a subset report and then also drill down.

The following figure shows complete cloud aggregated reports generated through Smart Reporting.

The following figure shows reports filtered by Tags and Failures Only.

The following figure shows reports filtered by failures only on Chrome browsers.

The following figure shows a single test report with logical steps and details for the specific test failure.
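The filters described in these captions (by tag, failures only, failures on Chrome) compose naturally as predicates. The sketch below models that composition in plain Java. The `Report` type and its fields are hypothetical and serve only to illustrate the filtering logic, not the Smart Reporting API.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;
import java.util.function.Predicate;
import java.util.stream.Collectors;

/** Hypothetical filter model; not part of Smart Reporting. */
final class ReportFilter {

    /** One report row; the field names are assumptions for this sketch. */
    static final class Report {
        final String name;
        final Set<String> tags;
        final String browser;
        final boolean passed;

        Report(String name, Set<String> tags, String browser, boolean passed) {
            this.name = name;
            this.tags = tags;
            this.browser = browser;
            this.passed = passed;
        }
    }

    static Predicate<Report> hasTag(String tag) {
        return r -> r.tags.contains(tag);
    }

    static Predicate<Report> failedOnly() {
        return r -> !r.passed;
    }

    static Predicate<Report> onBrowser(String browser) {
        return r -> browser.equals(r.browser);
    }

    /** Returns the names of the reports that match the combined filter. */
    static List<String> select(List<Report> reports, Predicate<Report> filter) {
        return reports.stream().filter(filter).map(r -> r.name).collect(Collectors.toList());
    }

    static Set<String> tags(String... values) {
        return new HashSet<>(Arrays.asList(values));
    }

    public static void main(String[] args) {
        List<Report> reports = Arrays.asList(
                new Report("geicoInsurance", tags("Regression"), "Chrome", false),
                new Report("perfectoSearch", tags("Perfecto Search"), "Chrome", true),
                new Report("geicoInsurance", tags("Regression"), "Safari", false));
        // Tag plus failures only
        System.out.println(select(reports, hasTag("Regression").and(failedOnly())));
        // Failures only on Chrome
        System.out.println(select(reports, failedOnly().and(onBrowser("Chrome"))));
    }
}
```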

If you want to investigate and triage the failure, you can easily get additional test artifacts that include environmental details, videos, vitals, network PCAP file, PDF reports, logs, and more (see for example the following figure).

4 | Implement logical steps within the test code

Logical steps bring order and meaning to the test scenario as a whole. With that in mind, make sure that each test step in your test scenario is well documented per the Reporting SDK so that it appears clearly in the test reports. As the following figure shows, we document the logical action before each step so that it is easy to track once the execution is completed.

If we execute the above example, we can see the logical steps side-by-side as they were developed in Java in the above snippet and drill into every step in the single test report. In addition, clicking a specific logical step brings up the visual for the specific command executed, as seen in the following figure.

Limitations

Perfecto tracks up to 3000 tags. If the number of tags in your system exceeds 3000, Perfecto may not find a tag if you try to filter by it.
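One way to stay within this limit is to audit how many distinct tags a suite produces before the tags reach the reporting system. The sketch below assumes the limit applies to distinct tag values and that you can collect the tags of each test as strings. `TagBudget` is a hypothetical helper, not an SDK class.

```java
import java.util.Arrays;
import java.util.Collection;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

/** Hypothetical audit helper; not part of the Reporting SDK. */
final class TagBudget {
    /** Limit stated in the text above. */
    static final int MAX_TRACKED_TAGS = 3000;

    /** Counts distinct tag values across all tests. */
    static int distinctTagCount(Collection<? extends Collection<String>> tagsPerTest) {
        Set<String> distinct = new HashSet<>();
        for (Collection<String> tags : tagsPerTest) {
            distinct.addAll(tags);
        }
        return distinct.size();
    }

    /** True when the suite uses more distinct tags than the stated limit. */
    static boolean exceedsLimit(Collection<? extends Collection<String>> tagsPerTest) {
        return distinctTagCount(tagsPerTest) > MAX_TRACKED_TAGS;
    }

    public static void main(String[] args) {
        List<List<String>> tagsPerTest = Arrays.asList(
                Arrays.asList("Regression", "iOS"),
                Arrays.asList("Regression", "Login"));
        System.out.println("Distinct tags: " + distinctTagCount(tagsPerTest));
        System.out.println("Over limit: " + exceedsLimit(tagsPerTest));
    }
}
```

Tags that embed a changing value, such as a build number, add a new distinct tag on every run, so they are the first place to look when the count grows.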

Summary

What we have documented above should allow you to shift from using a basic test report - either a legacy Perfecto report, TestNG report, or other - to a more customizable test report that, as we have demonstrated above, allows you to achieve the following outcomes:

- Better structured test scenarios and test suites.

- Use of tags from early test authoring as a method for faster triaging and prioritizing fixes.

- Shifting of tag-based tests into planned test activities (CI, Regression, Specific functional area testing, etc.).

- Easy filtering of big test data and drilldown into specific failures per test, platform, test result, or through groups.

- Elimination of flaky tests through high-quality visibility into failures.

The result is a methodology-based RTDD workflow that is easier to maintain.

To learn more about Smart Reporting, see Test analysis with Smart Reporting.