This in-report view is part of Insights. It is available for failed tests only and shows bar graphs that depict the complete history of the test case at hand, from its latest to its earliest run. The view provides an at-a-glance visual read of the most likely source of the latest failure and of whether the test is unstable or broken. The patterns in the graphs can suggest where to look next to resolve the issue.

To determine the test history, Perfecto considers the test name and the specified capabilities. If both name and capabilities are the same, Perfecto considers the executions as belonging to the same test. If even one capability is different, for example the browser version, Perfecto treats the executions as belonging to a different test. In that example, the execution that ran on a different browser version is omitted from the test failure history of this particular test.
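The matching rule described above can be sketched as follows. This is a minimal illustration, not Perfecto's implementation: the field names `name` and `capabilities`, the function names, and the assumption that capability values are plain strings are all hypothetical.

```python
from collections import defaultdict


def history_key(name, capabilities):
    """Build a key that is equal only when the name and ALL capabilities match.

    Hypothetical helper: a frozenset of (capability, value) pairs makes the
    key independent of the order in which capabilities were specified.
    """
    return (name, frozenset(capabilities.items()))


def group_by_test(executions):
    """Group executions so that each group forms one test's history."""
    groups = defaultdict(list)
    for execution in executions:
        key = history_key(execution["name"], execution["capabilities"])
        groups[key].append(execution)
    return dict(groups)


# Illustrative data only: the third run differs in browser version.
executions = [
    {"name": "login", "capabilities": {"browserName": "Chrome", "browserVersion": "120"}},
    {"name": "login", "capabilities": {"browserVersion": "120", "browserName": "Chrome"}},
    {"name": "login", "capabilities": {"browserName": "Chrome", "browserVersion": "121"}},
]
histories = group_by_test(executions)
```

Under these assumptions, the first two runs share one history, while the run on browser version 121 lands in a separate history and would not appear in the failure history of the other two.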

Each row in the Test failure history view represents 14 days, with the current date visible in the top row and aligned to the right. Each bar in a graph represents a day and is divided into the following segments:

- Successful runs (green)
- Runs that failed with the same exception as the one included in the underlying single test report (STR) (red)
- Runs that failed with a different exception (pink)
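To make the three segments concrete, the sketch below counts runs per day and per segment. It is an illustration under stated assumptions, not the product's logic: the run fields `day`, `passed`, and `exception`, the function name `daily_segments`, and the sample exception strings are hypothetical.

```python
from collections import Counter, defaultdict


def daily_segments(runs, reference_exception):
    """Count runs per day in the three segments of a bar.

    reference_exception is the exception from the report being viewed (the STR).
    """
    days = defaultdict(Counter)
    for run in runs:
        if run["passed"]:
            segment = "green"   # successful run
        elif run["exception"] == reference_exception:
            segment = "red"     # failed with the same exception as the STR
        else:
            segment = "pink"    # failed with a different exception
        days[run["day"]][segment] += 1
    return {day: dict(counts) for day, counts in days.items()}


# Illustrative data only.
runs = [
    {"day": "2024-05-09", "passed": True, "exception": None},
    {"day": "2024-05-09", "passed": True, "exception": None},
    {"day": "2024-05-09", "passed": False, "exception": "TimeoutException"},
    {"day": "2024-05-10", "passed": False, "exception": "NoSuchElementException"},
]
segments = daily_segments(runs, reference_exception="NoSuchElementException")
```

Here the bar for 2024-05-09 would have two green runs and one pink run, and the bar for 2024-05-10 would have one red run.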

The dates displayed are based on the browser's time zone.
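Because the dates follow the browser's time zone, the same run can fall on different days for different viewers. The sketch below shows the effect with Python's standard `datetime` module. The function name `local_day` and the sample timestamp are hypothetical, and a fixed UTC offset stands in for a real browser time zone.

```python
from datetime import datetime, timedelta, timezone


def local_day(timestamp_utc, viewer_tz):
    """Return the calendar day a run falls on in the viewer's time zone."""
    return timestamp_utc.astimezone(viewer_tz).date()


# Illustrative run time: 02:30 UTC on 10 May 2024.
run_time = datetime(2024, 5, 10, 2, 30, tzinfo=timezone.utc)
utc_minus_5 = timezone(timedelta(hours=-5))

print(local_day(run_time, timezone.utc))  # 2024-05-10
print(local_day(run_time, utc_minus_5))   # 2024-05-09
```

A viewer five hours behind UTC would therefore see this run counted in the bar for the previous day.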


Interact: Move the pointer over a bar in the graph to display a tooltip. The tooltip provides the date, the total number of test executions, the number of executions that failed vs. passed, and the code branches that failed during the last test run.

The following table provides clear-cut sample scenarios for failed tests. In reality, of course, cases are often more ambiguous.

|

Scenario |

Description |

Next steps |

Sample graph |

|---|---|---|---|

|

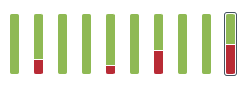

Test flaky |

When the test fails to produce consistent, reliable results over time, this may happen due to issues with the code, the test itself, or other, external factors. For example, certain programs could have been running in the background when the test failed but were not running when the test passed. If a test does not pass consistently, you may need to go back to the test case itself and check if it runs locally. If it does not, fix it, and then come back to this view a day or two later to see if and how the numbers have improved. If the test runs locally but fails when hooked up to CI, the problem may be a different one altogether. |

|

|

|

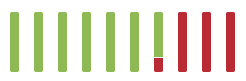

Test failing |

The test itself is stable, but it fails. This could happen because of an error in the code or an issue with the app under test. |

|

|

|

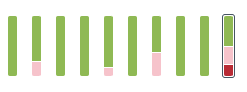

Test flaky and failing |

The test failed intermittently over time. This could be due to flakiness or because different people broke the test at different times. What we know for sure here is that the failure during the latest test run is related to a new issue. In this case, the person who last edited the test possibly broke it. |

|

To investigate specific test runs on a given date further, go back to the Report Library using the link at the top of the widget. Then, look at the failure reason or at the exception that occurred and take it from there.